2024~2025 SUBMERGED

Robot History

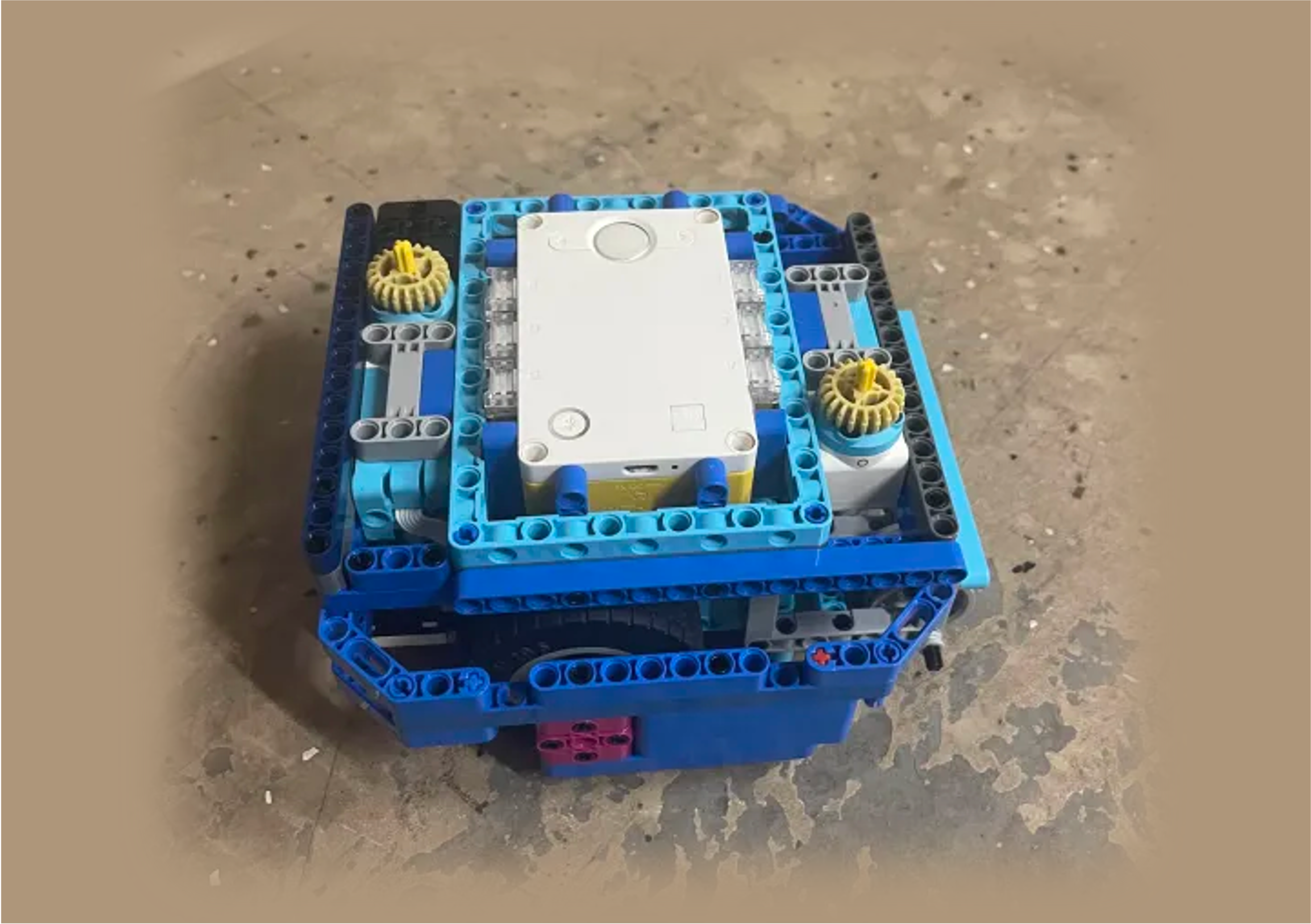

Blue Whale: Blue Whale was designed with two small motors on either side to operate attachments, allowing us to tackle missions on opposite sides of the field. However, we discovered that missions are rarely positioned that way, and using both motors often required relocating the gear mechanism to the opposite side, which was inconvenient. Additionally, the robot was too large, making it less efficient and harder to maneuver.

Blue Scorpion: Blue Scorpion used two motors, but we designed it to support four different attachment outputs. We were inspired by William Frantz, who built the first four-output robot. In our design, one motor is used to switch between outputs, while the other powers the selected attachment. However, this setup allows only one output to operate at a time, and the switching process can cause unintended movement, which leads to inaccuracy.

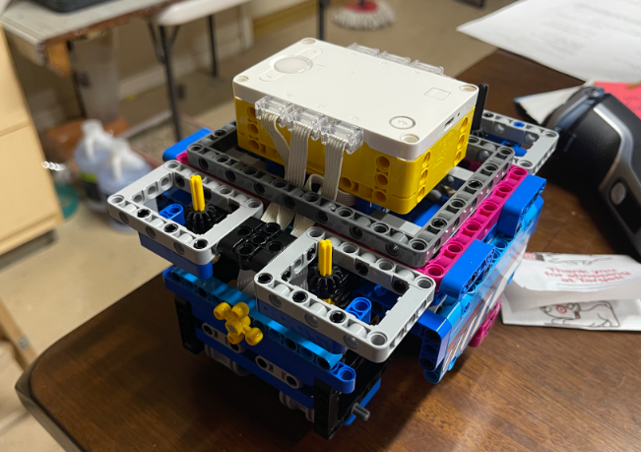

Cyber Truck: Cyber Truck uses two small motors and two large motors. We chose soft EV3 wheels to improve traction and increase driving accuracy. Since the SPIKE ball wheel isn’t compatible with our design, we added three balancers for stability. To save time during runs, we mounted light sensors on top of the robot to automatically switch attachment programs based on color cues.
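
The color-cue idea can be sketched as a small lookup table. This is a minimal illustration in plain Python, not the program that ran on Cyber Truck: the color names, the run functions, and the `select_run` and `launch` helpers are all hypothetical, and on a real hub the color would come from the light sensor rather than a string argument.

```python
# Hypothetical sketch: choose an attachment program from a detected color.
# The colors and run names are made up for illustration only.

def run_a():
    return "running program A"

def run_b():
    return "running program B"

def run_c():
    return "running program C"

# Each attachment carries a colored marker; the marker selects its program.
RUN_BY_COLOR = {
    "red": run_a,
    "green": run_b,
    "blue": run_c,
}

def select_run(detected_color):
    """Return the program for a color, or None if the color is unknown."""
    return RUN_BY_COLOR.get(detected_color)

def launch(detected_color):
    """Start the matching program, or report that nothing was recognized."""
    program = select_run(detected_color)
    if program is None:
        return "no attachment detected, waiting"
    return program()

print(launch("green"))
print(launch("yellow"))
```

Keeping the mapping in one dictionary means a new attachment only needs a new entry, not a new branch in the selection logic.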

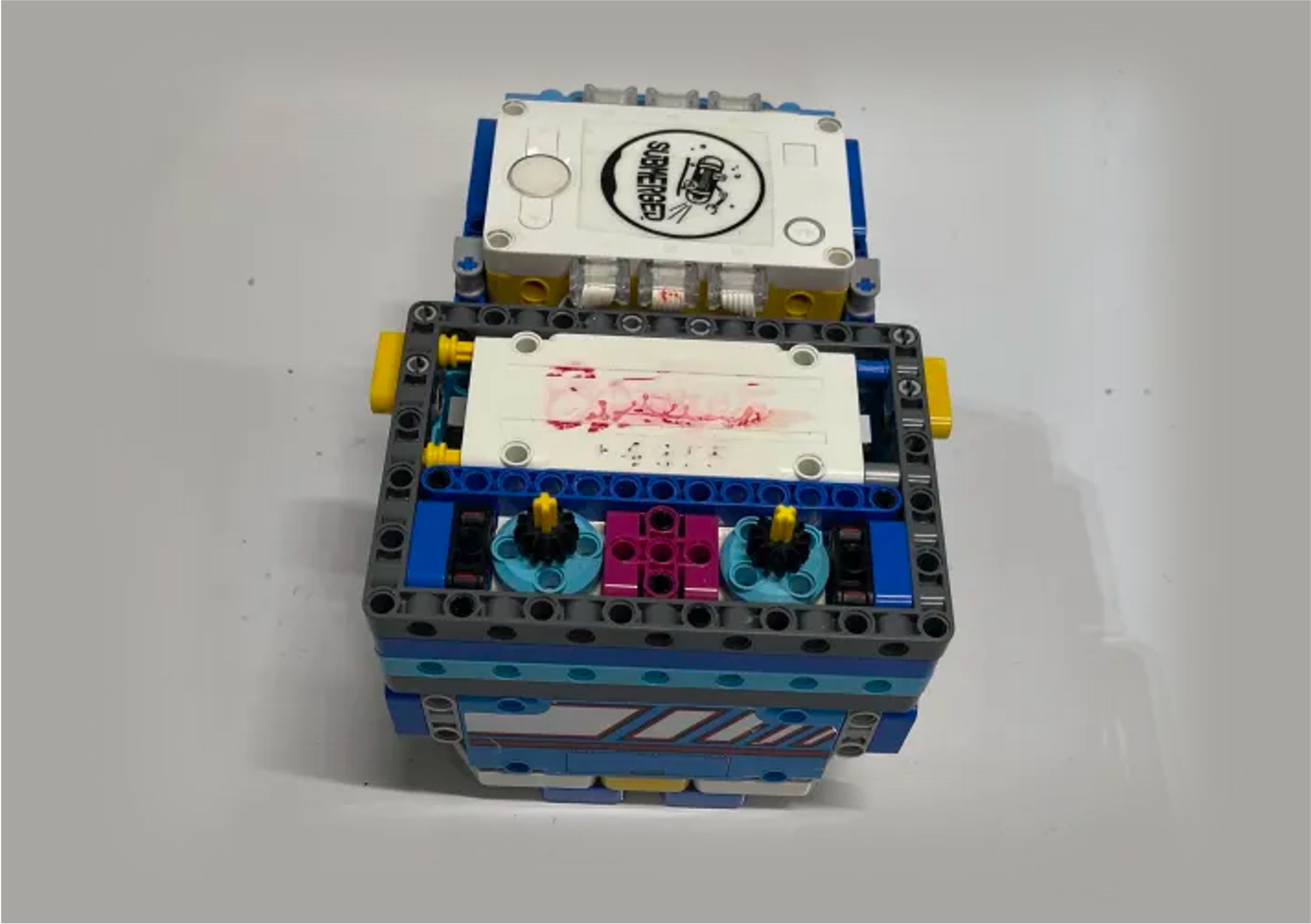

Black Panther: Black Panther is our final robot, developed after the Submerged season, and it features several key improvements. We added four motors, but unlike Blue Scorpion, these motors are linked, allowing multiple attachments to operate simultaneously. They are also positioned more strategically, making it easier to build and mount attachments—solving many of the challenges we faced in earlier designs.

Strategy Iterations

Mission Sequence

•A. Reef Segments, Krill, and Water Samples on the two sides block the paths to other missions, so they need to be collected first.

•B. Feeding the whale (Mission 12) should happen after all five krill have been collected into the right home area.

•C. Sample collection (Mission 14) should come before Research Vessel (Mission 15).

•D. Sending over the submersible should happen as late as possible to make sure it ends up closer to the opposing field.
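
These ordering rules can be checked mechanically. The sketch below uses Python's standard `graphlib` module to encode rules A to C as "must come before" relations and to produce one order that satisfies them. Rule D is handled by appending the submersible last. The task names are shorthand chosen for this example, and treating the side objects as a prerequisite of every other task is an assumed reading of rule A.

```python
from graphlib import TopologicalSorter

# Map each task to the set of tasks that must be finished before it.
# Task names are illustrative shorthand, not official mission names.
must_follow = {
    "collect_side_objects": set(),                       # rule A: collect first
    "M12_feed_whale": {"collect_side_objects"},          # rule B: krill are home first
    "M14_sample_collection": {"collect_side_objects"},   # rule A: paths cleared first
    "M15_research_vessel": {"M14_sample_collection"},    # rule C: samples before vessel
}

order = list(TopologicalSorter(must_follow).static_order())

# Rule D: the submersible goes as late as possible, so append it last.
order.append("send_submersible")

def respects_rules(sequence):
    """Check that every task appears after all of its prerequisites."""
    position = {task: i for i, task in enumerate(sequence)}
    return all(
        position[before] < position[task]
        for task, prerequisites in must_follow.items()
        for before in prerequisites
    )

print(order)
print(respects_rules(order))
```

The same `respects_rules` check can be run on any hand-written run order before it is tested on the table.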

Design Iterations

We knew it would be difficult to find the best strategy at the beginning, so we divided the strategy design process into four phases:

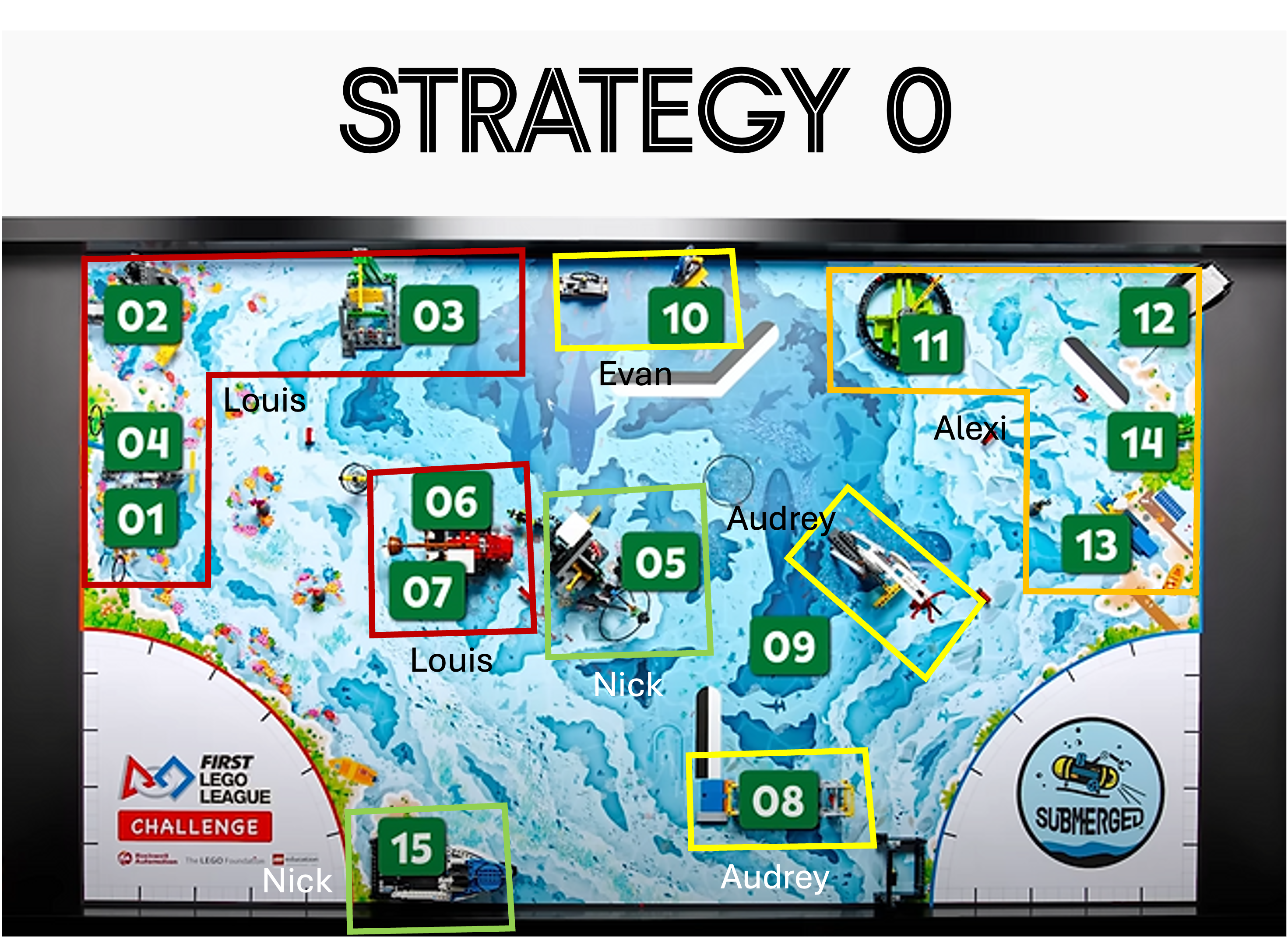

•Strategy v0 (Before scrimmage): We started designing attachments and got familiar with the missions. We focused on finding a good way to finish every mission, without much thought about combining missions or the time limit.

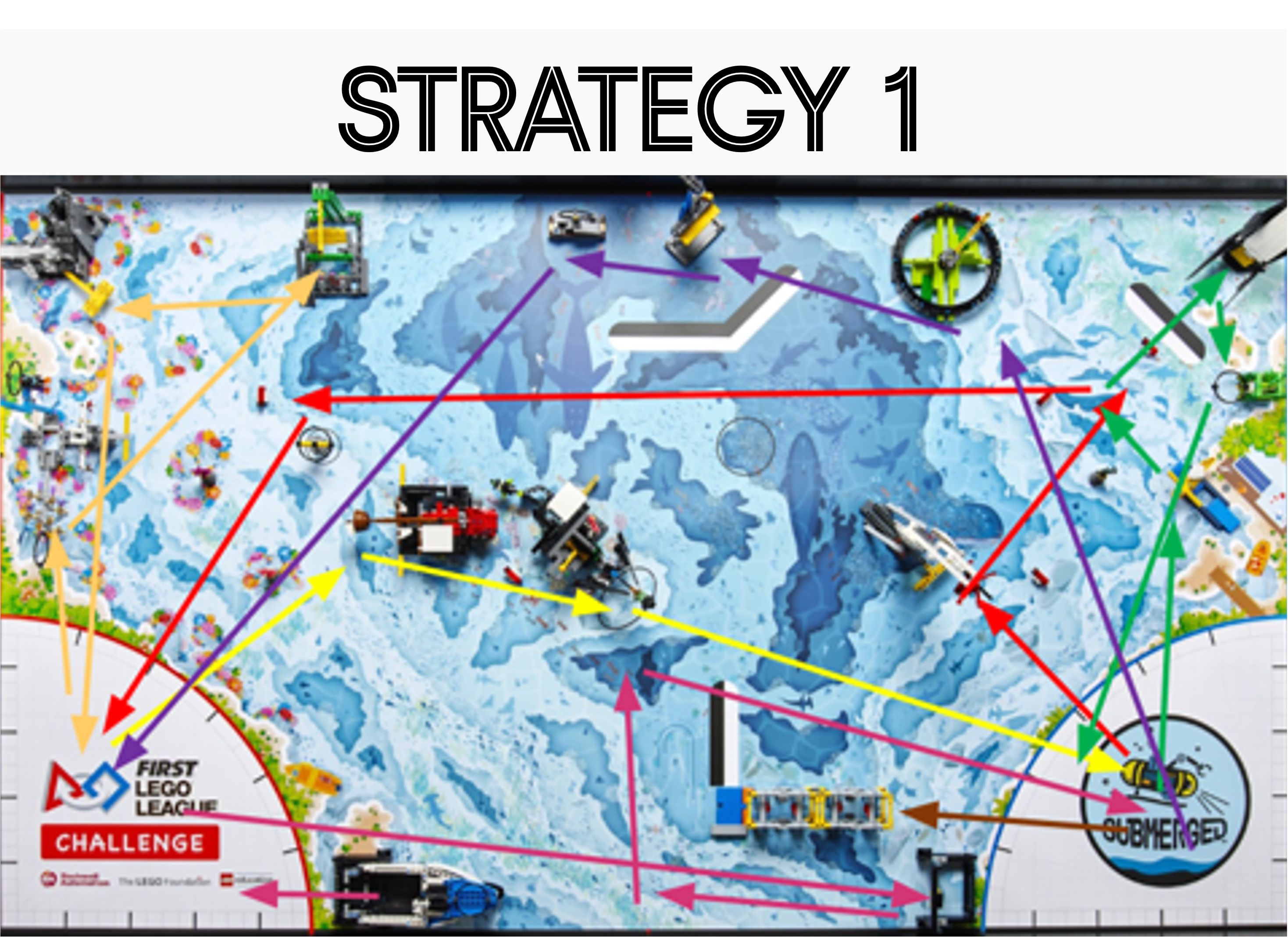

•Strategy v1 (Before qualifying): We began combining missions and focused on scoring as many points as possible, still without much attention to the time limit.

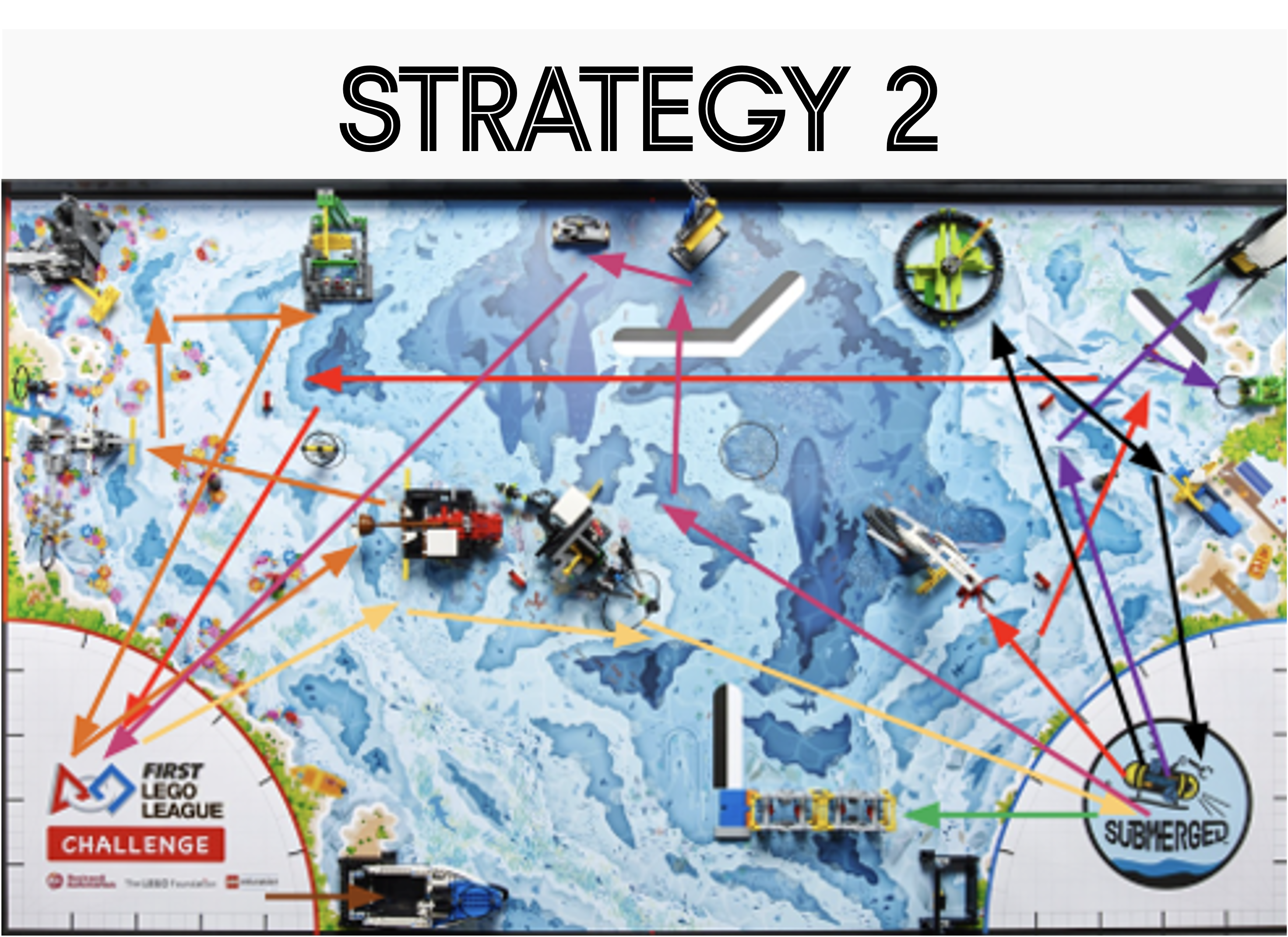

•Strategy v2 (Before state championship): We further combined attachments and missions and focused on finding the most efficient way to score as many points as possible in 2.5 minutes. Our goal was to score above 500 points.

•Strategy v3 (Ongoing): We will integrate more missions and optimize our performance to reach 620 points within 2.5 minutes, coding in Python. (This is what we hope to achieve if we make it to the world championship.)

Strategy Designs

We developed several strategy designs, each with its own characteristics and goals.

Strategy 0: Strategy 0 was our starting point for the Submerged season. It was mainly about getting familiar with the missions. Each team member was assigned 1–3 missions and created their own attachments. At this stage, we weren’t focused on time or starting from the home area—we were just aiming to complete as many missions as possible. With Strategy 0, we were able to score up to 480 points.

Strategy 1: Strategy 1 was a strong starting plan. Our main goal was to find ways to complete all the missions and maximize our score, without focusing too much on the time limit. As a result, this strategy had a high point potential, but it wasn’t efficient within the 2.5-minute match time. It included 8 separate runs, which made it difficult to finish everything in time.

Strategy 2: Strategy 2 was a major improvement over Strategy 1. It achieved a high score of 535 points while managing time more effectively. By combining several missions from Strategy 1 into fewer runs, we were able to save time and increase efficiency. We completed all missions except for Trident, Changing Shipping Lanes, and a few samples.

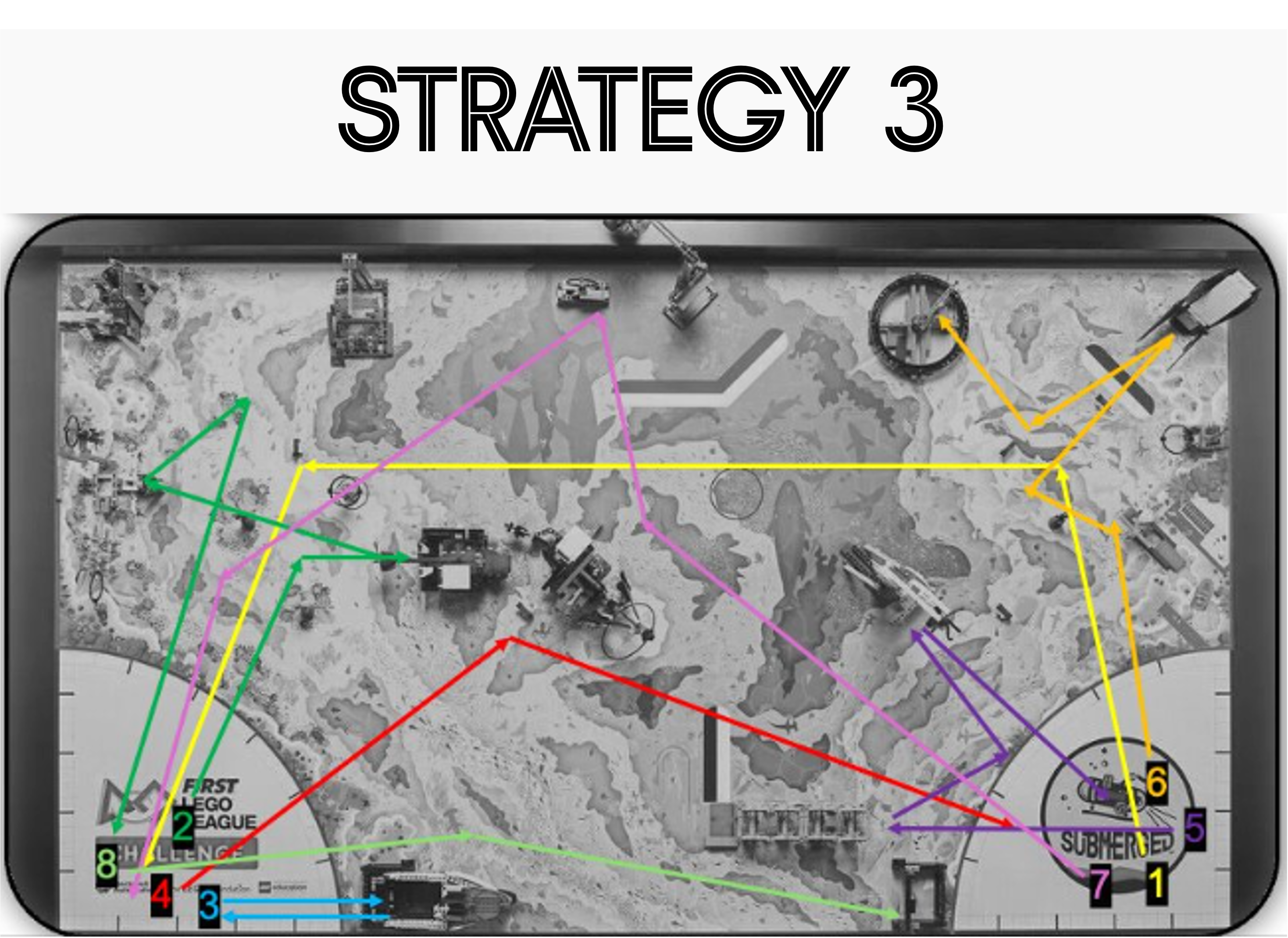

Strategy 3: Strategy 3 is our final and most successful strategy for the Submerged season. We increased our score from 535 to 620 points by optimizing our runs and improving efficiency. To stay within the 2-minute 30-second time limit, we created a new rule: each run must earn at least 5 points per second. This helped us stay focused on both speed and scoring. At Worlds, this strategy earned us 535 points in official matches!
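
The 5-points-per-second rule is simple arithmetic: divide a run's points by its duration and compare the result against the threshold. A minimal sketch follows. The run names, points, and times are made-up numbers for illustration, not measured results, and the function names are hypothetical.

```python
MIN_POINTS_PER_SECOND = 5.0  # the rule described above

def points_per_second(points, seconds):
    """Scoring rate of a single run."""
    return points / seconds

def meets_rule(points, seconds, threshold=MIN_POINTS_PER_SECOND):
    """True when the run earns at least `threshold` points per second."""
    return points_per_second(points, seconds) >= threshold

# Hypothetical runs: (name, points, seconds)
example_runs = [
    ("example run A", 180, 30.0),  # 6.0 points/s
    ("example run B", 40, 10.0),   # 4.0 points/s
]

for name, points, seconds in example_runs:
    rate = points_per_second(points, seconds)
    verdict = "keep" if meets_rule(points, seconds) else "rework"
    print(f"{name}: {rate:.1f} points/s -> {verdict}")
```

With these example numbers, run A passes at 6.0 points per second and run B is flagged for rework at 4.0.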

| Run # | V1 | V2 | V3 |
|---|---|---|---|
| 1 | M9, collect misc. objects (krill, coral, water sample [M14]) | M9, collect misc. objects (krill, coral, sample) | M9, collect misc. objects (coral, sample, kelp forest) |
| 2 | M1, M3, M2, M4 | M1, M3, M2, M4, M6, M7 | M1, M3, M2, M4, M6, M7 |
| 3 | M6, M7, collect krill | collect krill, drop shark, trident | M15 |
| 4 | M12, M13, kelp sample [M14], M5 | M8 | collect krill, drop shark, trident |
| 5 | M11, M10, seabed sample [M14], M5 | M12, kelp sample [M14] | M8 |
| 6 | M15 | M11, M13 | M11, M12, M13 |
| 7 | M8 | cold seep [M9], seabed sample [M14], M10, M5 | M9 |
| 8 | N/A | M15, coral segments | Seabed Sample, M10, M5, M9 |
| 9 | N/A | N/A | M15 |
| Max Score | 370 points | 565 points | 620 points |
| Coding | Scratch | Scratch | Python (Pybricks) |

Testing

A testing report is a formal document that records the performance results of a robot or attachment within a testing context. These reports are created after the strategy, attachment, and robot design have been finalized. We have three versions of our strategy, and we did formal testing for strategies v1 and v2. All tests were conducted under strict conditions and recorded in detail.

•It records the essential details of the target, procedure, and testing conditions so that other members can repeat the test and compare results easily.

•The report presents the data that lets us evaluate whether our attachments and code are functioning as intended.

•It provides a data basis for deciding whether any adjustments or changes are needed to improve performance.
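
As a rough illustration of what such a report captures, the sketch below stores the points scored in each trial of one run and derives the success ratio and average score from them. The `RunReport` class, its field names, and the trial numbers are hypothetical. Counting a trial as a success only when it earns the full score is one possible definition, not necessarily the one used in the tables that follow.

```python
from dataclasses import dataclass, field
from statistics import mean

@dataclass
class RunReport:
    name: str
    full_score: int
    conditions: str  # what another member needs to repeat the test
    trial_scores: list = field(default_factory=list)

    def success_ratio(self):
        """Fraction of trials that earned the full score."""
        hits = sum(1 for score in self.trial_scores if score == self.full_score)
        return hits / len(self.trial_scores)

    def average_score(self):
        """Mean points per trial."""
        return mean(self.trial_scores)

# Made-up example data, for illustration only.
report = RunReport(
    name="example run",
    full_score=40,
    conditions="same table, same start position, fully charged hub",
    trial_scores=[40, 40, 30, 40],
)
print(f"{report.success_ratio():.0%}", report.average_score())  # 75% 37.5
```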

Testing Strategy V1

| Run/Mission | Time | Success Ratio | Average Test Score | Full Score |
|---|---|---|---|---|
| Run 1 | 26.82 | 100% | 20 | 20 |
| Run 2 | 32.07 | 67% | 76 | 100 |
| Run 3 | 21.06 | 92% | 25 | 50 |
| Run 4 | 19.45 | 90% | 74 | 80 |
| Run 5 | 30.94 | 100% | 80 | 80 |
| Run 6/7 | 28.70 | 98% | 52 | 55 |
| Precision Tokens | N/A | 100% | 50 | 50 |
| Equipment Inspection | N/A | 100% | 20 | 20 |
| Mission Score | 2min 65sec | - | 397 | 455 |
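
The score totals in the last row can be cross-checked by adding up the rows above it. The short script below does this for the Strategy V1 table. The numbers are copied from the table, while the variable names are only illustrative. The summed run time counts only the time inside the runs, not any time spent between them.

```python
# Rows of the Strategy V1 table:
# (label, time in seconds or None, average test score, full score)
rows = [
    ("Run 1", 26.82, 20, 20),
    ("Run 2", 32.07, 76, 100),
    ("Run 3", 21.06, 25, 50),
    ("Run 4", 19.45, 74, 80),
    ("Run 5", 30.94, 80, 80),
    ("Run 6/7", 28.70, 52, 55),
    ("Precision Tokens", None, 50, 50),
    ("Equipment Inspection", None, 20, 20),
]

total_average = sum(avg for _, _, avg, _ in rows)
total_full = sum(full for _, _, _, full in rows)
run_seconds = round(sum(t for _, t, _, _ in rows if t is not None), 2)

print(total_average, total_full)  # 397 455
print(run_seconds)                # 159.04
print(f"{total_average / total_full:.0%} of the full score")  # 87% of the full score
```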

Testing Strategy V2

| Run/Mission | Time | Success Ratio | Average Test Score | Full Score |
|---|---|---|---|---|
| Run 1 | 16.48 | 81% | 24 | 25 |
| Run 2 | 29.47 | 72% | 158 | 180 |
| Run 3 | 9.51 | 90% | 20 | 30 |
| Run 4 | 6.29 | 93% | 32 | 40 |
| Run 5 | 12.75 | 100% | 80 | 80 |
| Run 6 | 18.45 | 94% | 63 | 90 |
| Run 7 | 3.76 | 90% | 37 | 40 |
| Precision Tokens | N/A | 100% | 50 | 50 |
| Equipment Inspection | N/A | 100% | 20 | 20 |
| Mission Score | 2min 36sec | - | 484 | 575 |

Testing Strategy V3

| Run/Mission | Time | Success Ratio | Average Test Score | Full Score |
|---|---|---|---|---|
| Run 1 | 13.36 | 98% | 15 | 15 |
| Run 2 | 29.53 | 72% | 164 | 180 |
| Run 3 | 4.90 | 92% | 9 | 15 |
| Run 4 | 21.34 | 98% | 37 | 40 |
| Run 5 | 11.1 | 100% | 44 | 60 |
| Run 6 | 24.98 | 75% | 87 | 100 |
| Run 7 | 15.41 | 96% | 43 | 45 |
| Precision Tokens | N/A | 100% | 50 | 50 |
| Equipment Inspection | N/A | 100% | 20 | 20 |
| Mission Score | 2min 20sec | - | 553 | 620 |